Focal Sampling SGD Biased towards Early Important Samples for Efficient Image Classification with Augmentation Selection

Image credit: Alessio Quercia

Image credit: Alessio Quercia

Abstract

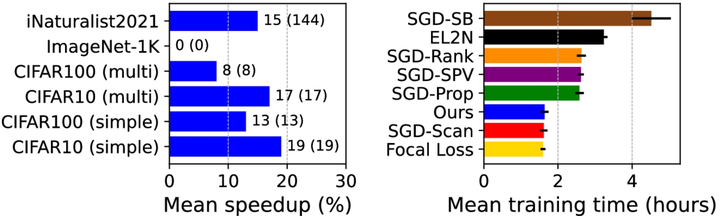

In deep learning, using larger training datasets usually leads to more accurate models. However, simply adding more but redundant data may be inefficient, as some training samples may be more informative than others. We propose Focal Sampling, a method that biases SGD (Stochastic Gradient Descent) towards samples that are found to be more important after a few training epochs, by sampling them more often for the rest of the training. In contrast to state-of-the-art, our approach requires less computational overhead to estimate sample importance, as it computes estimates once during training using the prediction probabilities, and does not require restarting training. In the experimental evaluation, we see that our learning technique trains faster than state-of-the-art and can achieve higher test accuracy, especially when datasets are not well balanced or when using multiple data augmentations. Lastly, results suggest that our approach has intrinsic balancing properties and that balancing datasets based on class importance, rather than by number of samples, can achieve higher test accuracy. Code is available at https://jugit.fz-juelich.de/ias-8/sgd_biased.