Self-Supervised Learning based on Transformed Image Reconstruction for Equivariance-Coherent Feature Representation

Image credit: Qin Wang

Image credit: Qin Wang

Abstract

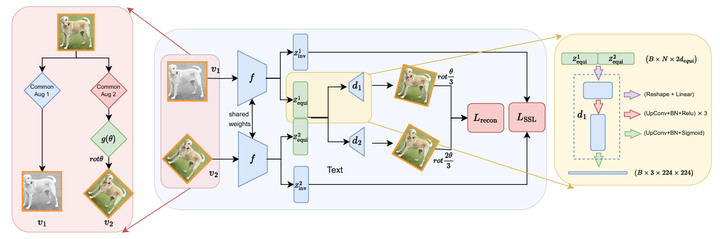

The equivariant behaviour of features is essential in many computer vision tasks, yet popular self-supervised learning (SSL) methods tend to constrain equivariance by design. We propose a self-supervised learning approach where the system learns transformations independently by reconstructing images that have undergone previously unseen transformations. Specifically, the model is tasked to reconstruct intermediate transformed images, e.g. translated or rotated images, without prior knowledge of these transformations. This auxiliary task encourages the model to develop equivariance-coherent features without relying on predefined transformation rules. To this end, we apply transformations to the input image, generating an image pair, and then split the extracted features into two sets per image. One set is used with a usual SSL loss encouraging invariance, the other with our loss based on the auxiliary task to reconstruct the intermediate transformed images. Our loss and the SSL loss are linearly combined with weighted terms. Evaluating on synthetic tasks with natural images, our proposed method strongly outperforms all competitors, regardless of whether they are designed to learn equivariance. Furthermore, when trained alongside augmentation based methods as the invariance tasks, such as iBOT or DINOv2, we successfully learn a balanced combination of invariant and equivariant features. Our approach performs strong on a rich set of realistic computer vision downstream tasks, almost always improving over all baselines.